10 things you should never tell an AI chatbot

This is a heartbreaking story out of Florida. Megan Garcia thought her 14-year-old son was spending all his time playing video games. She had no idea he was having abusive, in-depth and sexual conversations with a chatbot powered by the app Character AI.

Sewell Setzer III stopped sleeping and his grades tanked. He ultimately died by suicide. Just seconds before his death, Garcia says in a lawsuit, the bot told him, “Please come home to me as soon as possible, my love.” The boy asked, “What if I told you I could come home right now?” His Character AI bot answered, “Please do, my sweet king.”

You have to be smart

AI bots are owned by tech companies known for exploiting our trusting human nature, and they’re designed using algorithms that drive their profits. There are few guardrails or laws governing what they can and cannot do with the information they gather.

A photo illustration of an AI chatbot. (iStock)

A chatbot knows a lot about you from the moment you fire up the app or site. From your IP address, it gathers information about where you live. It can also track things you’ve searched for online and use any other permissions you granted when you accepted the chatbot’s terms and conditions.

The best way to protect yourself is to be careful about what info you offer up.

10 things not to say to AI

- Passwords or login credentials: A major privacy mistake. If someone gets access, they can take over your accounts in seconds.

- Your name, address or phone number: Chatbots aren’t designed to handle personally identifiable info. Once shared, you can’t control where it ends up or who sees it. Plug in a fake name if you want!

- Sensitive financial information: Never include bank account numbers, credit card details or other money matters in docs or text you upload. AI tools aren’t secure vaults — treat them like a crowded room.

- Medical or health data: Consumer AI chatbots generally aren’t covered by HIPAA, so redact your name and other identifying info if you ask AI for health advice. Your privacy is worth more than quick answers.

- Asking for illegal advice: That’s against every bot’s terms of service. You’ll probably get flagged. Plus, you might end up with more trouble than you bargained for.

- Hate speech or harmful content: This, too, can get you banned. No chatbot is a free pass to spread negativity or harm others.

- Confidential work or business info: Proprietary data, client details and trade secrets are all no-nos.

- Security question answers: Sharing them is like opening the front door to all your accounts at once.

- Explicit content: Keep it PG. Most chatbots filter this stuff, so anything inappropriate could get you banned, too.

- Other people’s personal info: Uploading this isn’t only a breach of trust; it can also violate data protection laws. Sharing private info without permission could land you in legal hot water.
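If you're comfortable with a little code, the "redact before you paste" advice above can be partly automated. Here's a minimal Python sketch, assuming simple U.S.-style formats. The `redact` function and its patterns are hypothetical and written for illustration; they are not part of any chatbot's tools. They will miss plenty (names and street addresses, for example), so you still need to read your text before sending it.

```python
import re

# Illustrative patterns only (an assumption, not a complete PII detector).
# Order matters: card numbers are replaced before phone numbers so the
# phone pattern cannot match a piece of a longer card number.
PATTERNS = [
    ("[EMAIL]", re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")),
    # 13 to 16 digits, optionally separated by single spaces or hyphens
    ("[CARD]", re.compile(r"(?<!\d)\d(?:[ -]?\d){12,15}(?!\d)")),
    # U.S. Social Security number written as 123-45-6789
    ("[SSN]", re.compile(r"(?<!\d)\d{3}-\d{2}-\d{4}(?!\d)")),
    # U.S. phone number, with optional +1 prefix and parentheses
    ("[PHONE]", re.compile(
        r"(?<!\d)(?:\+?1[ .-]?)?\(?\d{3}\)?[ .-]?\d{3}[ .-]?\d{4}(?!\d)"
    )),
]


def redact(text: str) -> str:
    """Replace anything matching the patterns above with a placeholder."""
    for placeholder, pattern in PATTERNS:
        text = pattern.sub(placeholder, text)
    return text


if __name__ == "__main__":
    prompt = "My email is jane.doe@example.com and my cell is 555-867-5309."
    print(redact(prompt))
    # prints: My email is [EMAIL] and my cell is [PHONE].
```

Run your draft through `redact` first, then copy the output into the chatbot. Names won't be caught, so swap in a fake one by hand.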

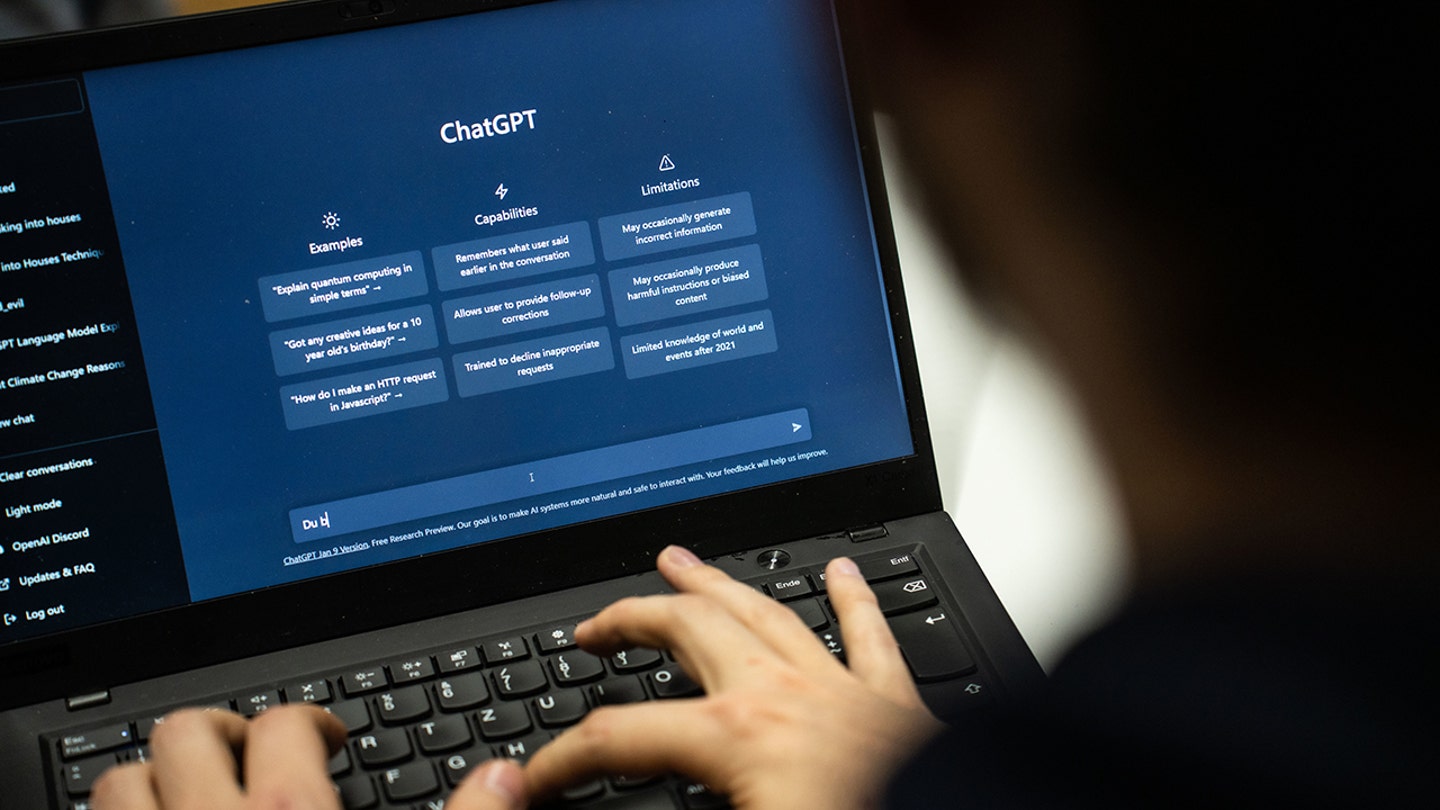

A person is seen using ChatGPT. (Frank Rumpenhorst/picture alliance via Getty Images)

Reclaim a (tiny) bit of privacy

Most chatbots require you to create an account. If you make one, don’t use login options like “Login with Google” or “Connect with Facebook.” Instead, sign up directly with your email address to create a truly unique login.

FYI, with a free ChatGPT or Perplexity account, you can turn off the memory features that remember everything you type in. Look in the app settings. For Google Gemini, you need a paid account to do this.

Google is pictured here. (AP Photo/Don Ryan)

No matter what, follow this rule

Don’t tell a chatbot anything you wouldn’t want made public. Trust me, I know it’s hard.

Even I find myself talking to ChatGPT like it’s a person. I say things like, “You can do better with that answer” or “Thanks for the help!” It’s easy to think your bot is a trusted ally, but it’s definitely not. It’s a data-collecting tool like any other.

Get tech-smarter on your schedule

Award-winning host Kim Komando is your secret weapon for navigating tech.

Copyright 2025, WestStar Multimedia Entertainment. All rights reserved.